If there are any vsyncologists out there… I am struggling to understand some very odd behavior. I made a simple class that inserts a delay into each frame so I can push the FPS up and down, adds a switch to turn vsync on and off, and displays both the FPS and a ‘nominal FPS’ on screen. Nominal FPS is what the FPS would be if the time spent in ‘switch window buffer’ and the ‘frame rate limiter’ were ignored. That way, when vsync is on, it’s easy to see what the FPS would be with vsync off.

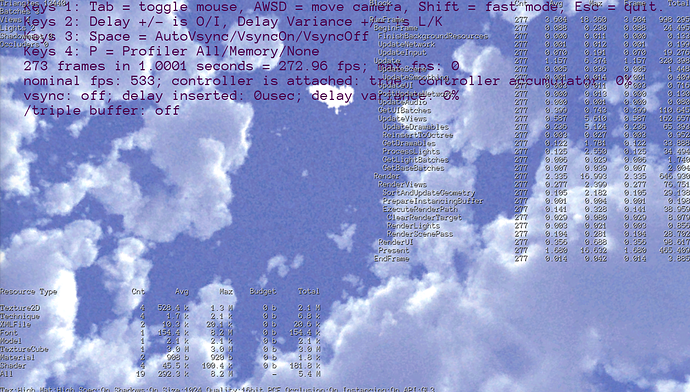

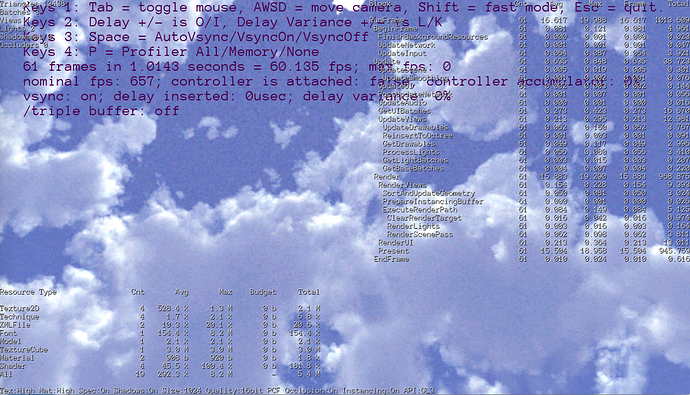

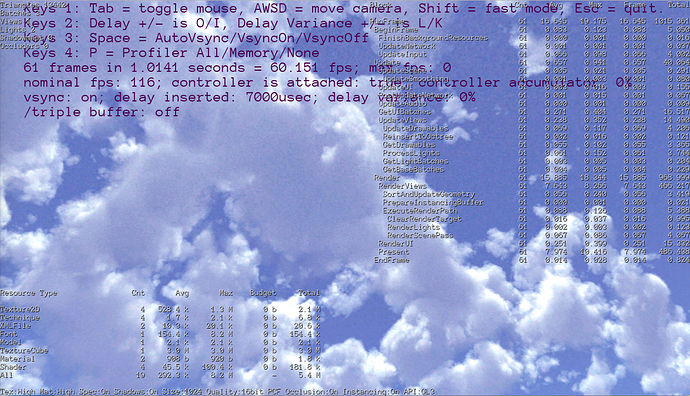
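As a text stand-in for the bookkeeping such a class needs, here is a minimal sketch in plain standard C++ rather than Urho3D. The names `FrameTiming`, `RealFps`, `NominalFps` and `FrameDelayer`, and the split of each frame into total, present (buffer swap) and limiter time, are assumptions for illustration; this is not the class shown in the screenshots. Nominal FPS simply subtracts the swap and limiter waits from the frame time before converting to FPS.

```cpp
#include <chrono>

// Hypothetical per-frame timing breakdown, in milliseconds.
struct FrameTiming {
    double totalMs;    // wall-clock time for the whole frame
    double presentMs;  // time spent in the buffer swap (where a vsync wait shows up)
    double limiterMs;  // time spent in a frame rate limiter
};

// Real FPS: based on the full frame time, waits included.
double RealFps(const FrameTiming& t) {
    return t.totalMs > 0.0 ? 1000.0 / t.totalMs : 0.0;
}

// Nominal FPS: the frame rate if the swap and limiter waits cost nothing.
double NominalFps(const FrameTiming& t) {
    const double workMs = t.totalMs - t.presentMs - t.limiterMs;
    return workMs > 0.0 ? 1000.0 / workMs : 0.0;
}

// Hypothetical artificial per-frame delay. It busy-waits rather than
// calling std::this_thread::sleep_for, because sleeping can overshoot
// by a scheduler tick and blur the nominal FPS reading.
class FrameDelayer {
public:
    void SetDelayMs(double ms) { delayMs_ = ms < 0.0 ? 0.0 : ms; }
    double DelayMs() const { return delayMs_; }

    void Apply() const {
        using clock = std::chrono::steady_clock;
        const auto end = clock::now() +
            std::chrono::duration_cast<clock::duration>(
                std::chrono::duration<double, std::milli>(delayMs_));
        while (clock::now() < end) {
            // spin until the requested delay has elapsed
        }
    }

private:
    double delayMs_ = 0.0;
};
```

For example, a 20 ms frame of which 3 ms was spent waiting in the swap gives `RealFps` of 50 and `NominalFps` of 1000/17, roughly 58.8.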

When a basic program starts up, I get really nice FPS: on the order of 1k FPS for optimized release builds, or around 330 FPS for debug builds. As expected, when vsync is turned on the real FPS drops to 60Hz. Adding some delay reduces the nominal FPS without affecting the real FPS.

[Vsync off, no added delay]

[Vsync on, no added delay]

[Vsync on, 7ms added delay]

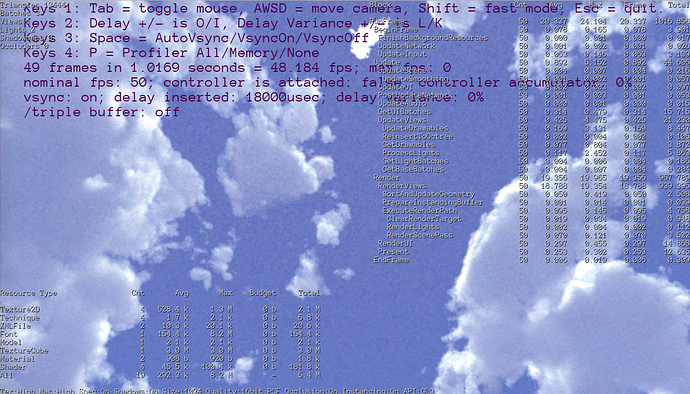

Now, the strange behavior appears when I drive the nominal FPS below 60Hz while vsync is on… The real FPS doesn’t drop to 30Hz; it just matches the nominal FPS! As far as I can tell, vsync is still working normally at 50Hz: if I turn vsync off, the FPS stays at 50Hz and screen tearing becomes evident. What is going on here? My graphics card is an old Intel HD Graphics 4000, and as far as I can tell triple buffering is off. I can’t believe my monitor’s refresh rate is changing… and `GetSubsystem<Graphics>()->GetRefreshRate()` returns 60Hz the whole time (I checked).

[Vsync on, added delay pushes nominal FPS to 50Hz]
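The 30Hz expectation comes from the classic double-buffered vsync model, where a frame that misses a vblank waits for the next one. Below is a rough sketch of that model next to a queued (triple-buffered or driver flip-queue) model; the function names are hypothetical, and both ignore real driver details such as how many frames the CPU may queue ahead. It only shows which model would produce the observed 50Hz. It does not establish what the Intel driver is actually doing.

```cpp
#include <algorithm>
#include <cmath>

// Simplified double-buffered vsync: the renderer blocks until the next
// vblank after a frame is finished, so every frame occupies a whole
// number of refresh intervals (60, 30, 20, 15 ... Hz on a 60Hz display).
double DoubleBufferedFps(double frameMs, double refreshHz) {
    const double intervalMs = 1000.0 / refreshHz;
    const double intervals = std::max(1.0, std::ceil(frameMs / intervalMs));
    return refreshHz / intervals;
}

// Simplified queued / triple-buffered vsync: the renderer does not wait
// for the vblank, so on average the displayed rate follows the render
// rate, capped at the refresh rate.
double QueuedFps(double frameMs, double refreshHz) {
    return std::min(refreshHz, 1000.0 / frameMs);
}
```

With a 20 ms frame (nominal 50Hz) on a 60Hz display, `DoubleBufferedFps(20.0, 60.0)` gives 30 while `QueuedFps(20.0, 60.0)` gives 50. Any frame time under about 16.67 ms gives 60 in both models, which matches the earlier observation that added delay lowers nominal FPS without touching real FPS.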

Possible hint… I switched to windowed mode and dragged the window around, which made ‘Present’ spike dramatically and the FPS drop a lot.

Note: I inserted the delay into ‘RenderViews’ as part of a failed experiment; its placement has no impact.

P.S. The Urho3D profiler is incredibly easy to use.