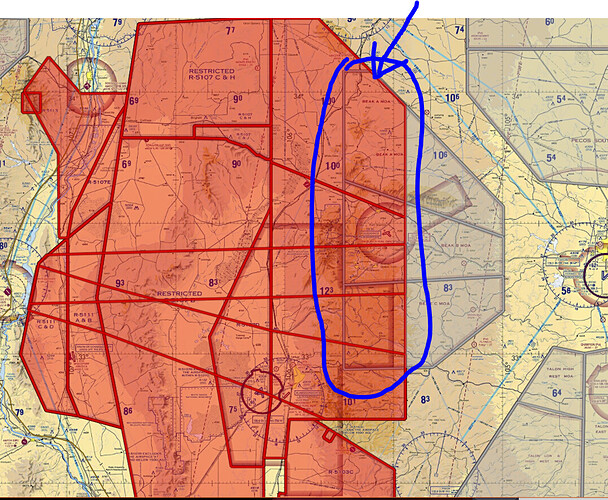

We have a map app that overlays airspaces onto a single transparent layer. The bottom layer (the map) is 100% opaque. Where the airspaces overlap, however, they should NOT blend with each other; instead, the last one to render should dominate. See the included image: it shows two overlapping airspaces, Gray and Red. The Red layer is dominant, so where they overlap (circled in blue pen) the translucent color is ONLY red (NOT a blend of red and gray).
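To make the difference concrete, here is the per-pixel arithmetic in the overlap. It assumes standard "over" alpha blending, the 40% opacity mentioned in this post ($\alpha = 0.4$), and labels of my own choosing: $M$ for the map color, $G$ for gray, $R$ for red.

$$
\begin{aligned}
C_{\text{per-layer}} &= \alpha R + (1-\alpha)\bigl(\alpha G + (1-\alpha) M\bigr) = 0.4R + 0.24G + 0.36M \\
C_{\text{wanted}} &= \alpha R + (1-\alpha) M = 0.4R + 0.6M
\end{aligned}
$$

Blending each airspace separately leaks 24% gray into the overlap. Flattening the airspaces first (last one wins) and blending the result once leaves only red over the map.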

To achieve this, we've implemented an ugly HACK. We placed a physical plane in 3D space in front of our camera and rendered these transparent objects into that plane's texture (render-to-texture, RTT) at 100% opacity (no blending). We then render the resulting plane with the Main Camera, using a shader that makes it only 40% opaque.

This hack is ugly and kludgy. What we REALLY want is a second camera that renders to an offscreen RenderBuffer, and then to render that buffer into the Main Camera's output using the 40% opacity shader.

We do not know how to render one Camera’s resulting RenderBuffer to another Camera directly.

(That is why we resorted to the hack of placing a physical plane in the scene, positioned 1.866 Z units in front of the other camera. It is too kludgy, and it causes us problems when the viewport size changes, etc.)

So in short, we’d like to know how to:

- Have two cameras in the scene (one is the Main Camera; the other only renders to an offscreen buffer).

- As a final pass, render the second camera's Render Buffer into the Main Camera's Render Buffer at 40% opacity.
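As a rough illustration of those two passes, here is a minimal Python sketch on a single row of pixels. It is not engine code: the post doesn't say which engine or API is in use, so no camera or render-target calls are shown. Pass 1 writes the airspaces into an offscreen buffer with blending off, so the last one wins. Pass 2 blends that buffer over the map once at 40%. The function names, the 1-D pixel row and the colors are all hypothetical, chosen only for the example.

```python
from typing import List, Optional, Sequence, Tuple

RGB = Tuple[float, float, float]
Airspace = Tuple[range, RGB]  # hypothetical: (pixel indices it covers, its color)


def render_offscreen(width: int, airspaces: Sequence[Airspace]) -> List[Optional[RGB]]:
    """Pass 1: draw every airspace fully opaque with blending disabled.

    Each write replaces whatever is already in the buffer,
    so the airspace drawn last wins wherever shapes overlap.
    """
    buffer: List[Optional[RGB]] = [None] * width  # None = nothing drawn (transparent)
    for pixels, color in airspaces:
        for x in pixels:
            buffer[x] = color
    return buffer


def blend(top: RGB, base: RGB, opacity: float) -> RGB:
    """Standard 'over' blend of one color onto an opaque base color."""
    return tuple(opacity * t + (1.0 - opacity) * b for t, b in zip(top, base))


def composite(map_row: Sequence[RGB], overlay: Sequence[Optional[RGB]],
              opacity: float) -> List[RGB]:
    """Pass 2: blend the flattened offscreen buffer over the map once, at one opacity."""
    return [base if top is None else blend(top, base, opacity)
            for base, top in zip(map_row, overlay)]


def blend_each_layer(map_row: Sequence[RGB], airspaces: Sequence[Airspace],
                     opacity: float) -> List[RGB]:
    """For comparison: blending each airspace straight onto the map mixes overlaps."""
    out = list(map_row)
    for pixels, color in airspaces:
        for x in pixels:
            out[x] = blend(color, out[x], opacity)
    return out


# Example: a white map row, gray airspace on pixels 0-3, red on pixels 2-5 (drawn last).
WHITE, GRAY, RED = (1.0, 1.0, 1.0), (0.5, 0.5, 0.5), (1.0, 0.0, 0.0)
map_row = [WHITE] * 6
airspaces = [(range(0, 4), GRAY), (range(2, 6), RED)]

overlay = render_offscreen(len(map_row), airspaces)
final = composite(map_row, overlay, opacity=0.4)
# final[2] (overlap) is identical to final[4] (red only): no gray leaks in.
```

In an engine, pass 1 would typically correspond to the second camera rendering into an offscreen render target with blending disabled, and pass 2 to drawing that target over the Main Camera's output as a full-screen quad or blit with the 40% shader. Because a full-screen pass is sized from the viewport rather than placed in the scene, it avoids positioning a plane at a fixed Z distance. How it is wired up is engine-specific.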

The main thing I don't know is how to tell the MainCamera to execute a pass like this.