I could make different images for different LODs, but in my case I have LODs 0 to 8. For example, I could make one low-resolution image for the whole map (LOD 8), and then maybe 4 images for LOD 7. That is the minimum number of images I would need, and it already occupies 5 texture units! IIUC, only 16 texture units are guaranteed, and I still need other textures (e.g. normal, specular, several terrain detail maps). If we use different images for different LODs, we also need the same number of images for normal, specular, etc.

I am not sure what a good solution for this would be.

Anyway, I tried cutting my source image into 4 pieces.

It looks like my computer can load textures up to 8192 pixels per side.

My texture image is about twice that size in each dimension, so I divided it into 4 pieces. Here is some very rough demo code:

```cpp
const int kMaxSupportedSingleHeightMapSize = 8192;

// Texture units that receive the four heightmap pieces (units 0-3).
const std::vector<TextureUnit> units{TU_DIFFUSE, TU_NORMAL, TU_SPECULAR, TU_EMISSIVE};

for (int i = 0; i < 4; ++i) {
    auto texture = SharedPtr<Texture2D>(new Texture2D(context_));

    // Column and row of this piece in the 2x2 grid.
    int index_x = i % 2;
    int index_y = i / 2;

    // GetSubimage() treats right/bottom as exclusive (width = right - left),
    // so clamp to the image size, not size - 1, or the last column/row is lost.
    int x0 = index_x * kMaxSupportedSingleHeightMapSize;
    int x1 = std::min(heightmap_image_->GetWidth(), (index_x + 1) * kMaxSupportedSingleHeightMapSize);
    int y0 = index_y * kMaxSupportedSingleHeightMapSize;
    int y1 = std::min(heightmap_image_->GetHeight(), (index_y + 1) * kMaxSupportedSingleHeightMapSize);

    // GetSubimage() returns a newly allocated Image; the SharedPtr takes ownership.
    SharedPtr<Image> heightmap_test(heightmap_image_->GetSubimage(IntRect(x0, y0, x1, y1)));

    texture->SetFilterMode(FILTER_NEAREST);
    texture->SetData(heightmap_test, false);

    // I also tried other texture units here, but it made no difference in my case.
    batches_[0].material_->SetTexture(units[i], texture);
}
```

Then, in the shader, I sample the matching heightmap piece for vertex displacement:

```glsl
float GetHeight(vec2 pos) {
    vec2 texCoord;
    vec2 heights;

    if (pos.x <= cMapSize.x && pos.y <= cMapSize.y) {
        texCoord = vec2(pos.x / cMapSize.x, pos.y / cMapSize.y);
        heights = texture(sHeightMap0, texCoord).rg;
    } else if (pos.x > cMapSize.x && pos.y <= cMapSize.y) {
        texCoord = vec2(pos.x / cMapSize.x - 1.0, pos.y / cMapSize.y);
        heights = texture(sHeightMap1, texCoord).rg;
    } else if (pos.x <= cMapSize.x && pos.y > cMapSize.y) {
        texCoord = vec2(pos.x / cMapSize.x, pos.y / cMapSize.y - 1.0);
        heights = texture(sHeightMap2, texCoord).rg;
    } else if (pos.x > cMapSize.x && pos.y > cMapSize.y) {
        texCoord = vec2(pos.x / cMapSize.x - 1.0, pos.y / cMapSize.y - 1.0);
        heights = texture(sHeightMap3, texCoord).rg;
    }

    ...
}
```

The material definition looks like this:

```xml
<material>
    <technique name="Techniques/CdlodTerrain.xml" />
    <!-- Units 0-3 are reserved for the heightmap pieces, which are set from code. -->
    <texture unit="0" name="" />
    <texture unit="1" name="" />
    <texture unit="2" name="" />
    <texture unit="3" name="" />
    <texture unit="4" name="HeightMapsTest/DEM_print.png" />
    <texture unit="5" name="Textures/TerrainDetail1.dds" />
    ...
</material>
```

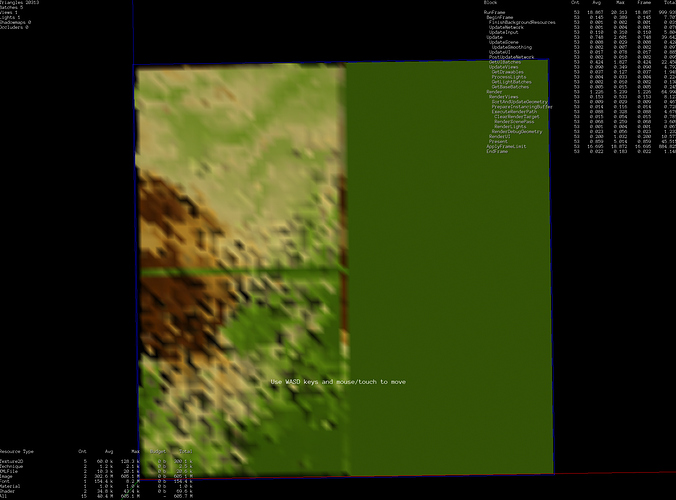

Splitting the image this way works fine for a smaller heightmap.

With my full-size heightmap, however, some of the pieces do not show up. It looks as if they are being unloaded automatically somehow.

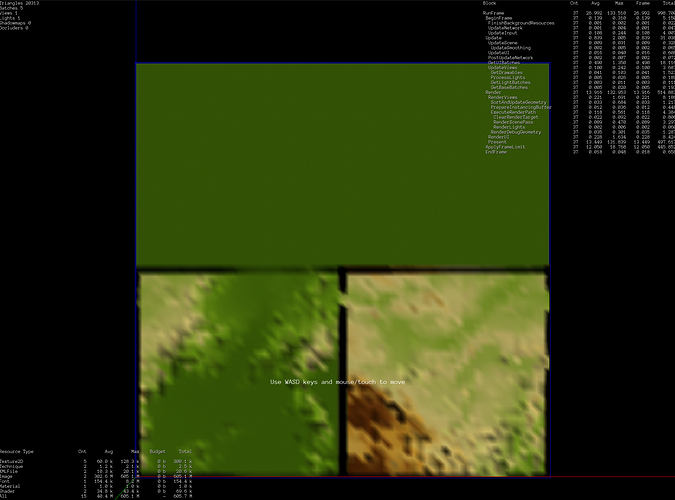

If I arbitrarily swap the order in which the heightmaps are sampled in the shader, for example:

```glsl
float GetHeight(vec2 pos) {
    ...
    heights = texture(sHeightMap3, texCoord).rg;
    ...
    heights = texture(sHeightMap2, texCoord).rg;
    ...
    heights = texture(sHeightMap1, texCoord).rg;
    ...
    heights = texture(sHeightMap0, texCoord).rg;
    ...
}
```

then a different set of pieces remains visible. For example, the bottom-left piece is now a different one.

(Don't mind the edges; I haven't handled those yet.)

So I suspect OpenGL is automatically unloading textures because they take up too much memory? BTW: my source heightmap is ~170 MB as a PNG, about 16k pixels per side.

But how can I verify that? And is there any way to query, through the Urho3D API, which textures are loaded or unloaded at runtime?

Or, more generally, how else should I approach this problem?