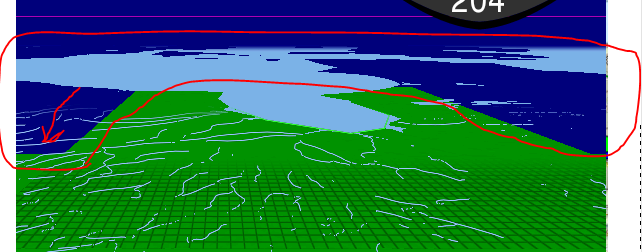

I am posting just to confirm something I think I learned today. It appears to me that DepthBias behaves entirely differently between DirectX and OpenGL. On OpenGL, a constant depth bias of 0.001 made our far terrain shift by a LOT, while on DirectX the same 0.001 setting only shifts it a small amount (and looks good).

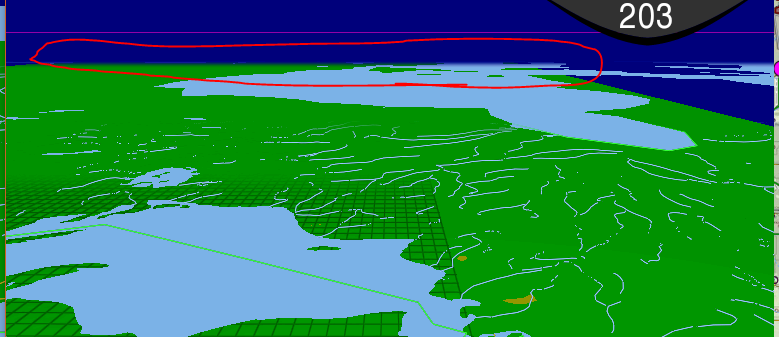

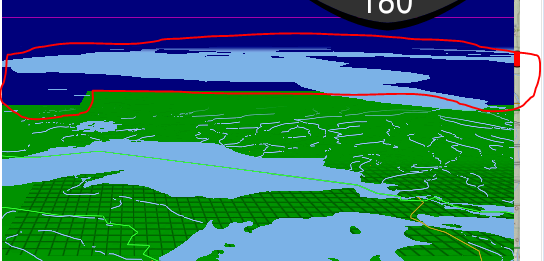

In short, we have near and far terrain in our 3D view. The far terrain shows much less detail, so we put it UNDER the near terrain, so that you don't see the far terrain until you are beyond the point where the near terrain ends.

For some reason, on OpenGL, the constant DepthBias of 0.001 is AMPLIFIED A LOT compared to DirectX. I would have expected Urho3D to make the two behave similarly.

Code looks like this:

BiasParameters s_FarBias = new BiasParameters() { ConstantBias = 0.001f };

Our solution, tentatively, is to vary ConstantBias by an order of magnitude on OpenGL so that it behaves similarly to DirectX.
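Roughly what I have in mind, as a sketch only. `FarTerrainBias`, `ConstantBiasFor`, and the `apiName` string are names I made up for illustration. I am assuming the active backend can be identified by a string such as "D3D11" or "GL3", and that OpenGL needs a value about ten times smaller; the exact factor would have to be tuned by eye.

```csharp
using System;

static class FarTerrainBias
{
    // Value from the post: looks good on DirectX.
    const float DirectXConstantBias = 0.001f;

    // Assumption: scale down by one order of magnitude on OpenGL.
    // This factor is a guess and needs visual tuning.
    const float OpenGLScale = 0.1f;

    // apiName is a hypothetical backend identifier, e.g. "D3D11" or "GL3".
    public static float ConstantBiasFor(string apiName)
    {
        bool isOpenGL = apiName.StartsWith("GL", StringComparison.OrdinalIgnoreCase);
        return isOpenGL ? DirectXConstantBias * OpenGLScale : DirectXConstantBias;
    }
}
```

The result would then replace the hard-coded value, e.g. `new BiasParameters() { ConstantBias = FarTerrainBias.ConstantBiasFor(apiName) }`.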

Can someone explain the best practice for how a developer should deal with this?